Releases: run-llama/llama_index

v0.10.10

v0.10.8

v0.10.7

v0.10.6

[0.10.6] - 2024-02-17

First, apologies for missing the changelog for the last few versions. We're still figuring out the best process with 400+ packages.

At some point, each package will have a dedicated changelog.

But for now, onto the "master" changelog.

New Features

Bug Fixes / Nits

- Various fixes for clickhouse vector store (#10799)

- Fix index name in neo4j vector store (#10749)

- Fixes to sagemaker embeddings (#10778)

- Fixed performance issues when splitting nodes (#10766)

- Fix non-float values in reranker + BM25 (#10930)

- OpenAI-agent should be a dep of openai program (#10930)

- Add missing shortcut imports for query pipeline components (#10930)

- Fix NLTK and tiktoken not being bundled properly with core (#10930)

- Add back `llama_index.core.__version__` (#10930)

v0.10.5

v0.10.3

v0.10.1 (and v0.10.0)

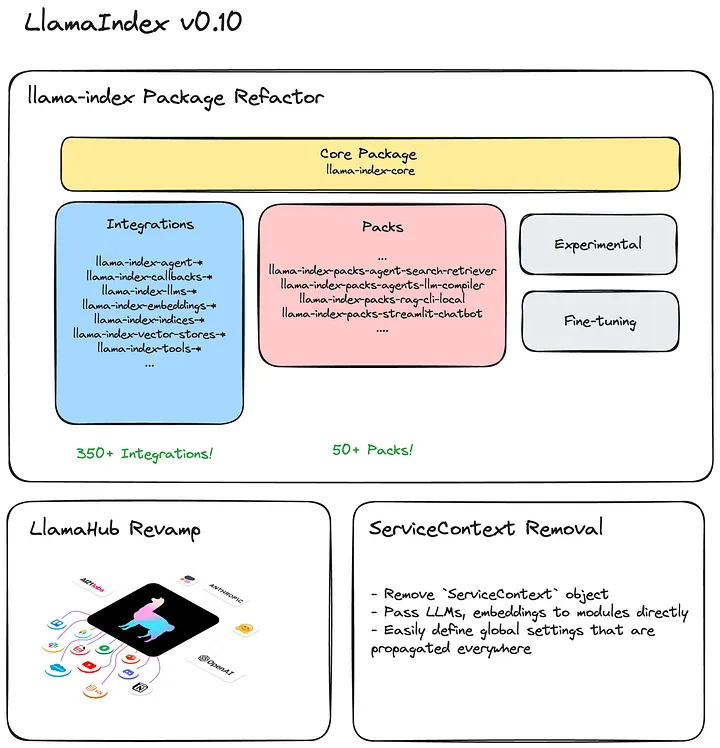

Today we’re excited to launch LlamaIndex v0.10.0. It is by far the biggest update to our Python package to date (see this gargantuan PR), and it takes a massive step towards making LlamaIndex a next-generation, production-ready data framework for your LLM applications.

LlamaIndex v0.10 contains some major updates:

- We have created a llama-index-core package and split all integrations and templates into separate packages: Hundreds of integrations (LLMs, embeddings, vector stores, data loaders, callbacks, agent tools, and more) are now versioned and packaged as separate PyPI packages, while preserving namespace imports. For example, you can still use `from llama_index.llms.openai import OpenAI` to import an LLM.

- LlamaHub will be the central hub for all integrations: the former llama-hub repo itself has been consolidated into the main llama_index repo. Instead of integrations being split between the core library and LlamaHub, every integration will be listed on LlamaHub. We are actively working on updating the site, so stay tuned!

- ServiceContext is deprecated: Every LlamaIndex user is familiar with ServiceContext, which over time has become a clunky, unneeded abstraction for managing LLMs, embeddings, chunk sizes, callbacks, and more. As a result, we are completely deprecating it; you can now either specify arguments directly or set a global default.
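
With ServiceContext gone, each component resolves its LLM, chunk size, and so on either from an argument passed directly or from a global default (in v0.10 that default lives on the `Settings` object importable from `llama_index.core`). The sketch below does not use the library. It models that resolution rule with a hypothetical `Defaults` class and a hypothetical `build_index` function, assuming that an explicitly passed argument always takes precedence over the global default.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Defaults:
    """Hypothetical stand-in for a process-wide defaults object (not the real API)."""
    llm: str = "default-llm"  # illustrative placeholder values
    chunk_size: int = 1024


defaults = Defaults()


def build_index(docs: List[str], llm: Optional[str] = None,
                chunk_size: Optional[int] = None) -> dict:
    """Hypothetical component: an explicit argument wins, else fall back to the global default."""
    return {
        "llm": llm if llm is not None else defaults.llm,
        "chunk_size": chunk_size if chunk_size is not None else defaults.chunk_size,
        "num_docs": len(docs),
    }


# Option 1: set a global default once and let every component pick it up.
defaults.llm = "my-llm"
defaults.chunk_size = 512
a = build_index(["doc"])

# Option 2: pass an argument directly for a single call; unset ones still use the default.
b = build_index(["doc"], llm="other-llm")

print(a)  # {'llm': 'my-llm', 'chunk_size': 512, 'num_docs': 1}
print(b)  # {'llm': 'other-llm', 'chunk_size': 512, 'num_docs': 1}
```

The design choice this illustrates is that nothing has to be bundled into a single context object: a default is set once, and any individual call can still override just the one setting it cares about.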

Upgrading your codebase to LlamaIndex v0.10 may lead to some breakages, primarily around our integrations/packaging changes, but fortunately we’ve included some scripts to make it as easy as possible to migrate your codebase to use LlamaIndex v0.10.
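
The packaging change described above keeps imports such as `from llama_index.llms.openai import OpenAI` working even though the code now ships in separate PyPI packages. A standard Python mechanism for this kind of layout is the PEP 420 implicit namespace package: a top-level directory with no `__init__.py` can be assembled from several installed distributions. The sketch below assumes that mechanism and uses a hypothetical `demo_ns` package instead of the real `llama_index` distributions. Each simulated distribution lives in its own directory so that the merge is visible.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def write_module(root: Path, relpath: str, source: str) -> None:
    """Write `source` to root/relpath, creating parent directories.

    Only leaf packages get an __init__.py. The shared top-level directory
    (and the intermediate `llms` directory) deliberately has none, which
    makes it a PEP 420 namespace package.
    """
    path = root / relpath
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(source)


with tempfile.TemporaryDirectory() as tmp:
    # Two hypothetical "distributions", each installed into its own directory.
    core_root = Path(tmp) / "core_dist"
    openai_root = Path(tmp) / "openai_dist"
    write_module(core_root, "demo_ns/core/__init__.py", "__version__ = 'demo'\n")
    write_module(openai_root, "demo_ns/llms/openai/__init__.py",
                 "class OpenAI:\n    provider = 'openai'\n")

    # Make both directories importable and drop any stale finder caches.
    sys.path[:0] = [str(core_root), str(openai_root)]
    importlib.invalidate_caches()

    # Same dotted import style, even though the code lives in separate trees.
    import demo_ns.core
    from demo_ns.llms.openai import OpenAI

    core_version = demo_ns.core.__version__
    provider = OpenAI.provider
    # The namespace package's __path__ spans both distribution directories.
    namespace_dirs = sorted(Path(p).parent.name for p in demo_ns.__path__)

print(core_version, provider, namespace_dirs)
```

A regular `pip install` would usually place both trees in the same `site-packages` directory. The namespace layout lets them coexist there without either package having to own a shared top-level `__init__.py`.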

v0.9.48

v0.9.47

v0.9.46
